On one end of the line, Taiwanese uncles and aunties read about the newfound healing powers of “yam leaf milk,” an elixir that purportedly prevents high blood pressure, high blood sugar and cholesterol. On the other end are the “merchants” fabricating such stories, who use the resulting income to buy cars and mansions.

Attracted by provocative and controversial headlines they see on platforms such as Google, Facebook and LINE, these unsuspecting uncles and aunties are contributing clicks to “content farms” — companies that produce massive amounts of low-quality news articles in order to collect money from page clicks.

A team at The Reporter spent two months investigating this phenomenon, following a trail which began in public LINE groups and ultimately revealed a transnational money-making machine which has operated for at least six years between Taiwan, Singapore, Malaysia, Hong Kong and China.

After gaining membership to their Telegram, WhatsApp and Facebook groups, we checked the list of members and discovered a motley crew of Taiwanese and overseas Chinese individuals, from a member of a Taiwanese pro-unification political party, to a businessman living in Kaohsiung who founded the well-known Taiwanese information warfare websites Ghost Island News (鬼島狂新聞) and Blue-White Sandals Counterattack (藍白拖的逆襲).

At the end of November, next to the Wanhua Railway Station in Taipei, between forty and fifty elderly people sat in a community activity center. But they weren’t playing cards or singing songs that day. On each table was a pamphlet reading “A Guide to Checking Fake News” and the audience listened attentively to the presenter.

“Okay everyone, when you wake up, the first thing you do is check LINE, right?” 78-year-old Ivy asks. The elderly respond in unison like schoolchildren: “Right!”

“If you’ve been scammed before, can you raise your hand?” Ivy asks. The atmosphere suddenly chills. After a second someone laughs, and the words “fake news” ripple through the crowd as a few hands are slowly raised into the air. “Let’s give them a round of applause!” Ivy says, encouraging them to find the courage to admit past mistakes.

At the end of last year, Ivy and three other partners established the Fakenews Cleaner (假新聞清潔劑). Since then, with the help of over seven hundred multi-generational volunteers from towns and cities of varying sizes, Ivy and her team have travelled around Taiwan giving over a hundred public presentations in community spaces, temples, and traditional markets. These volunteers form local fake news self-help groups, and report questionable news that appears on LINE every day.

It’s the Fakenews Cleaner team that tells us about the cure-all elixirs circulating on LINE that most Taiwanese have never heard of:

Yam leaf milk: cures high blood pressure, high blood sugar, high cholesterol, and gout

Cilantro water: cleanses, detoxes, and lowers blood pressure

Bitter leaf: lowers blood pressure, cures diabetes, lowers cholesterol, protects the liver, cures cancer

These snake oil recipes are posted on websites with innocuous names, such as Jintian Toutiao (not to be confused with the ByteDance news app Jinri Toutiao), KanWatch, and beeper.live. The terms of service on these websites state that they are not responsible for the veracity of content on their platforms. But in today’s Taiwan, middle-aged and elderly people who primarily get their news from LINE often accept what they read as truth. Many of the seniors that the Fakenews Cleaner team has met say they’ve seen claims of cure-all elixirs like the ones described above. “Once during a presentation in Zhudong we realized that the entire village had been drying bitter leaves to make tea,” said Ivy. In Nantou county, a village community development association would host yam leaf tea drinking events every morning.

“The situation was worse than we could have imagined, and I became increasingly frightened,” says Melody, a volunteer with Fakenews Cleaner. In their interactions with the elderly, volunteers sometimes play the role of a private tutor, giving one-on-one help with questions about digital products, and while doing so, they have incidentally witnessed the disaster brewing in LINE groups for the elderly.

“For some people, as soon as they open LINE, the screen becomes filled with news from forty to fifty content farm groups,” says Mark, a Fakenews Cleaner volunteer. “During class, some people ask us why we are trying to censor them.” These content farms fold false and disputed information into their content. The content is often propagated through web links that, once clicked, require the user to subscribe to that content farm’s LINE account before viewing. The user then receives daily news from the content farm.

A content farm (or content mill) is a website that specializes in producing high-traffic articles, videos and images with very little original content and generates revenue via advertising. The truth behind their claims is often difficult to verify, and their creators use various legal and illegal means to create said content. Content mills do not proactively manage content, and much of it violates copyright, is plagiarized or minimally rewritten.

Volunteers for Fakenews Cleaner from all over Taiwan have realized that the disinformation is highly coordinated, strategic and effective. “Often, all within a day or two, we will see the same viral content being re-shared from all over. It’s really very scary,” says Mark. Elderly people who live alone in villages often have no option other than asking these volunteers to verify whether the content they see is correct. It only takes a single slip-up for them to become victims of disinformation.

Fact-checking group MyGoPen -- which sounds similar to the Taiwanese Hokkien phrase mài koh phiàn (嘜擱騙) or “don't be fooled again” -- has over 180,000 followers on its official LINE group. The founders of the group were concerned about the flurry of disinformation on social media and started MyGoPen in 2015. Since then, the group has put together a substantial database of stories with false information.

Robin, a project manager at MyGoPen, noted that most content farms do not operate locally in Taiwan, with at least 60 percent of disinformation or disputed news stories originating from overseas. Some of the images contain simplified characters, or phrases used only in China, or official pronouncements by the Chinese government. MyGoPen has already examined more than 900 items; their program automatically compares news items with those in the database, and the user can view the results directly when a match occurs.

But after they wrote this program, their opponents carried out a counterattack. In the early morning, some users would take fake news already in the MyGoPen database and tweak the titles, images, videos and text. If the program did not recognize the altered version, then they would begin to disseminate it instead.
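The article does not say how MyGoPen's program compares items, so the following is only a minimal sketch of the general problem, not their method. It assumes a tiny hypothetical database (`KNOWN_ITEMS`) and uses Python's standard `difflib` to show why an exact lookup misses a lightly tweaked copy while a similarity threshold can still flag it. The threshold of 0.8 and all sample strings are illustrative assumptions.

```python
from difflib import SequenceMatcher

# Hypothetical stand-in for a database of already debunked items.
KNOWN_ITEMS = [
    "Yam leaf milk cures high blood pressure, high blood sugar and gout",
    "Cilantro water cleanses, detoxes and lowers blood pressure",
]


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial edits do not matter."""
    return " ".join(text.lower().split())


def best_match(item: str, known=KNOWN_ITEMS, threshold: float = 0.8):
    """Return (known_item, score) for the closest entry, or None if below threshold."""
    scored = [
        (k, SequenceMatcher(None, normalize(item), normalize(k)).ratio())
        for k in known
    ]
    top = max(scored, key=lambda pair: pair[1])
    return top if top[1] >= threshold else None


# A lightly altered copy: changed capitalization, "&" for "and", added "!".
tweaked = "Yam leaf milk CURES high blood pressure, high blood sugar & gout!"

exact_hit = tweaked in KNOWN_ITEMS   # exact comparison misses the altered copy
fuzzy_hit = best_match(tweaked)      # similarity comparison can still find it
```

Heavier rewrites, or changes to images and video, would slip past a text comparison like this one, which is consistent with the cat-and-mouse dynamic Robin describes.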

The battle between the two sides rages on. Robin has observed that a large number of articles with very similar content would tend to appear before elections. For example, during the Hong Kong anti-extradition bill protests, a wave of rumours circulated online that claimed protesters were promising rewards of $20 million HKD ($2.5 million USD) for each police officer killed.

In the half-year before Taiwan’s 2020 presidential and legislative elections, content portraying China favourably bubbled up in Taiwanese LINE groups, such as news of Chinese “flying trains” that could reach speeds of 4000 km per hour (3 times the speed of airplanes) or high speed trains with carriages made of bamboo. Pointing out an article containing disinformation which was shared by 180,000 users, Robin says, “this is an unending war.”

We followed the trail of these alluring stories to find out where they come from, leading us all the way to Malaysia.

On December 13, 2019, Facebook removed 118 fan pages and 99 groups and put restrictions on 51 accounts, citing violations of its community standards.

Two days earlier, Yee Kok Wai (余國威) from Puchong, Malaysia, was the first to cry foul, posting on his timeline that Facebook had notified him that he had violated its community standards. Scrolling back through Yee’s Facebook timeline, The Reporter discovered that he had made similar posts in September, October and November, including one saying that his account was suspended on his birthday so that he was unable to reply to birthday wishes. “Facebook is censoring me to oblivion,” he wrote.

He is a soldier on the opposite side of this “endless war,” the self-appointed chairperson of the Global Chinese Alliance (全球華人聯盟) Facebook page. Yee also owns its associated content farms, fan pages and groups.

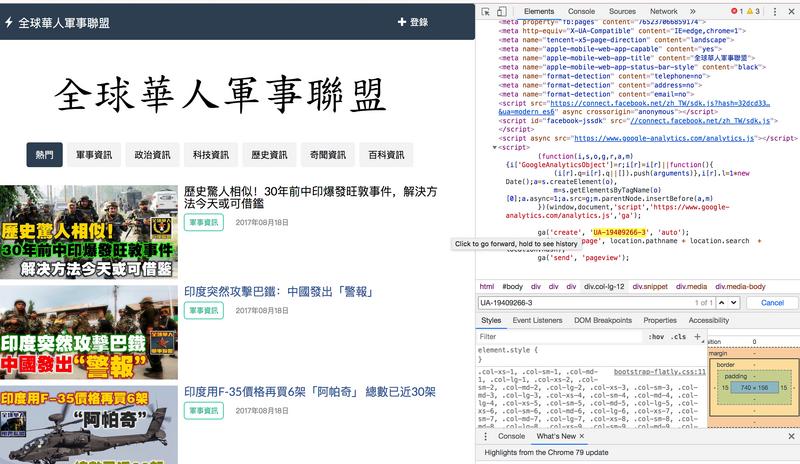

Yee Kok Wai began this line of work on December 17, 2014 when he established a series of Facebook pages and groups, with many under names beginning with “Global Chinese” (全球華人), such as the Global Chinese Military Alliance (全球華人軍事聯盟) and the Heavy Duty Scooter Association (重機車社團). From his own public statements we found that the Facebook pages and groups under his control had a total of 300,000 followers and members.

Though he describes himself as a low-key person, his Facebook posts can be viewed by the public and are shared daily. He rides a red heavy-duty scooter with which he often poses in the photographs he posts. In October of 2019 he posted a photograph of a new BMW 523i, valued at over $2 million NTD ($65,700 USD), and wrote “I should buy a new car!”

As chairman of the Global Chinese Alliance, Yee’s heart leans toward China. He celebrated the anniversary of the CCP and expressed support for the Hong Kong police during the anti-extradition bill protests. The entire Global Chinese Alliance media group took the same viewpoint, mass producing content with a pro-China ideology and praising the accomplishments of the Chinese Communist Party. The content that Yee shares via his Facebook pages and groups is largely from content farms such as KanWatch, beeper.live and Qiqu News (奇趣網).

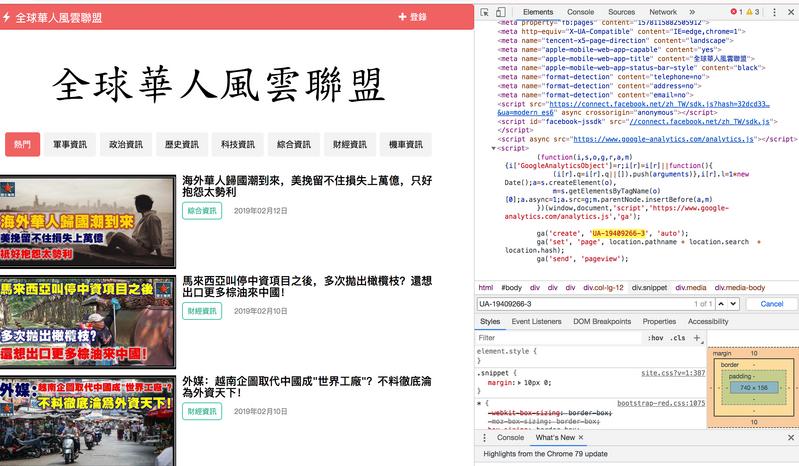

Yee has also dabbled in creating his own content farms, including: the Global Chinese Weather Union (全球華人風雲聯盟) and the Global Chinese Taiwan Union (全球華人台灣聯盟). In 2017 he continued to expand his operations, creating child pages such as Huiqi Worldview (慧琪世界觀), Qiqi World (琪琪看世界), Qiqi News (琦琦看新聞), Qiqi Military (琦琦看軍事), Qiqi Life (琪琪看生活), all featuring the same girl wearing a red bare-shouldered cheongsam.

We confirmed with TeamT5, a company with a long history in the information security industry, that these “Qiqi” fan pages with tens of thousands of followers and the Global Chinese fan pages operated under the same patterns under different names. Both would share content from separate websites called Qiqi World and Qiqi News. The report that TeamT5 sent us not only indicated that they used the same methods to manipulate information, but that their HTML source code was essentially the same.

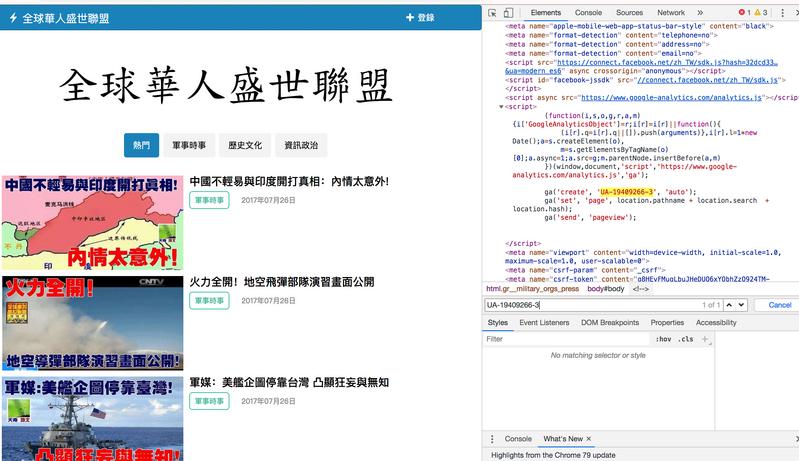

On inspection, we found that the Google Analytics tracking IDs associated with these webpages were all the same: UA-19409266. This ID can be used to identify affiliated websites; if two websites use the same Google Analytics tracking ID, this means that the same person or organization is tracking the web traffic through those sites.

The next step we took was to use the tool PublicWWW to search the source code of websites to find the keyword “UA-19409266.” We found 386 other websites using the same Google Analytics ID. First on the list was Jintian Toutiao, a content platform commonly seen in Taiwan.
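The matching step described above can be sketched in a few lines of Python. This is an illustration rather than the tooling The Reporter or PublicWWW actually used. It relies on the fact that classic Google Analytics IDs take the form `UA-<account>-<property>`, so sites under one account share the `UA-<account>` part. The domain names, the page sources, and the property suffixes `-7` and `-1` below are hypothetical.

```python
import re
from collections import defaultdict

# Classic Google Analytics IDs look like UA-<account>-<property>.
UA_PATTERN = re.compile(r"UA-(\d+)(?:-(\d+))?")


def account_ids(html: str) -> set:
    """Return the account-level part (UA-<account>) of every tracking ID in the HTML."""
    return {f"UA-{m.group(1)}" for m in UA_PATTERN.finditer(html)}


def group_by_account(pages: dict) -> dict:
    """Map each account-level ID to the set of sites whose source contains it."""
    groups = defaultdict(set)
    for site, html in pages.items():
        for acct in account_ids(html):
            groups[acct].add(site)
    return dict(groups)


# Hypothetical page sources; only the account number UA-19409266 comes from the article.
pages = {
    "site-a.example": "<script>ga('create', 'UA-19409266-3', 'auto');</script>",
    "site-b.example": "<script>ga('create', 'UA-19409266-7', 'auto');</script>",
    "site-c.example": "<script>ga('create', 'UA-11111111-1', 'auto');</script>",
}

# Keep only accounts that appear on more than one site.
shared = {
    acct: sites
    for acct, sites in group_by_account(pages).items()
    if len(sites) > 1
}
```

Here `shared` would contain only the account that appears on more than one site. A shared ID is a strong lead rather than proof on its own, which is why the investigation also cross-checked registration emails and source code.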

It turns out that many websites, Facebook pages, and content farms that appear under different names to the average user could be run by the same people behind the scenes.

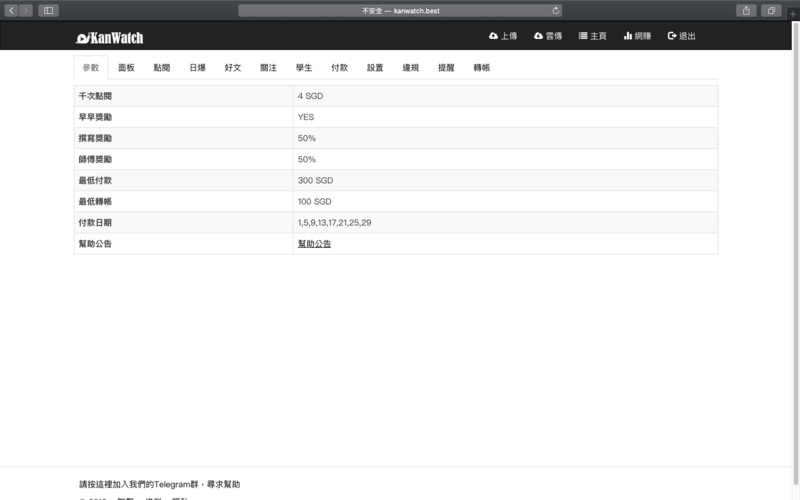

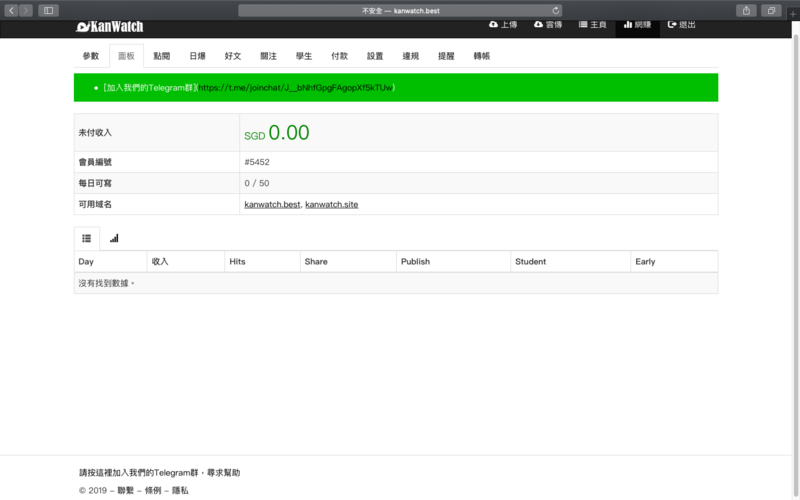

In order to further understand the organization behind this group, journalists from The Reporter created accounts on content platforms beeper.live, which deals in text articles, and KanWatch, which deals in video content (under user IDs 10636 and 5452 respectively).

The platforms were very open, and it only took us a few minutes to register, after filling out some basic information and providing an account on a third-party payment system such as PayPal. Each platform is like a shared blog for thousands of people, providing a robust set of tools for its users to create drafts and monitor revenue and viewing statistics. Every day, users on the platform, strangers to each other, make money by separately sharing content on their own social media pages and groups, allowing for wide dissemination.

There are two ways to make money on the platform. The first is to share existing articles. This method is tailored toward newcomers, and many platforms require users to share a certain number of articles before they can create their own. A user can receive around 10 Singapore dollars for every thousand views.

The second way is to write articles. What is unique about the platform is that there is a mechanism for authors to adjust the “profit sharing ratios” which determine how much of the advertising revenue goes toward the user that created the content versus the user who shared it. This vertically integrated direct sale model incentivizes the wide sharing of content.

Users can see the most popular articles of the day, and displayed next to each article are viewer statistics and a clone button so that users can easily rewrite articles.

Success in this industry has little to do with creating content, but rather with creating traffic flow. Having a page or group with a lot of followers who will click on your links is key. Yee Kok Wai’s expertise on this matter shows: the 20,000 users that follow his Facebook pages and click on his shared links form the basis of his business and profits.

Though Yee Kok Wai’s followers are his source of web traffic, he also provides reading material for those with similar political leanings, as well as a place to vent their emotions. The energetic content farm manager saw this as an opportunity to establish a center for setting up online discussions.

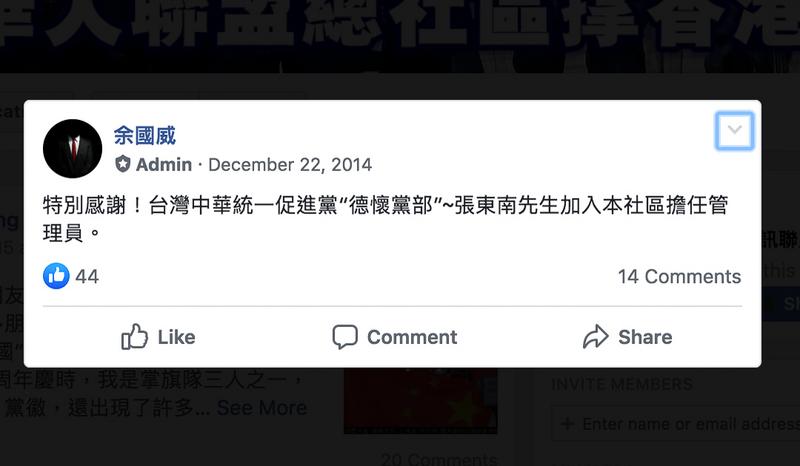

In 2014, Yee Kok Wai started to proactively invite various partners to join his Facebook group. One of those members was Chang Dong-nan (張東南) of the Chinese Unification Promotion Party (CUPP). Yee publicly welcomed Chang into the Global Chinese Alliance with the message: “a special thanks to CUPP member Chang Dong-nan for becoming an administrator for this community!” In 2015, Chang was invited to attend the Communist Party of China’s Central Party School in Beijing, where he was selected to act as a Taiwanese delegate for a study group run by the Association for Promotion of Peaceful Reunification of China (統促會). Since Chang and Yee shared a common political ideology, they naturally shared each other’s posts.

“In Malaysia, making content farms is already an industry. You can make a living just doing that,” said independent Malaysian data journalist Kuek Ser Kuang Keng (郭史光慶). There are three major content farms in Malaysia, and in their heyday they could make money just by writing youth-oriented or inspirational articles. Later, when the platforms started to manage content, “a lot of people sold off their fan pages on the cheap,” said Kuek Ser.

Those who didn’t sell are still panning for gold in various content farm platforms.

We found that these mutual strangers who generate content can respond quickly to changes in the environment. In November, when a journalist from The Reporter attempted to create an account on Jintian Toutiao, the registration page asked users to “move over to beeper.live.” Not long after, the Jintian Toutiao registration page vanished. Not only that, the platform rules are repeatedly updated, sometimes opaquely, without a public announcement. On Christmas Eve, page administrators decided to ban political content, and also warned users not to share or create political content, which was very popular at the time.

Who makes these rules? Who creates this system of rewards and penalties, and requests that members move to a different platform? We eventually found our way into the platform’s secret base. Here we found Evan, the man behind the curtain, the man who people call “boss.”

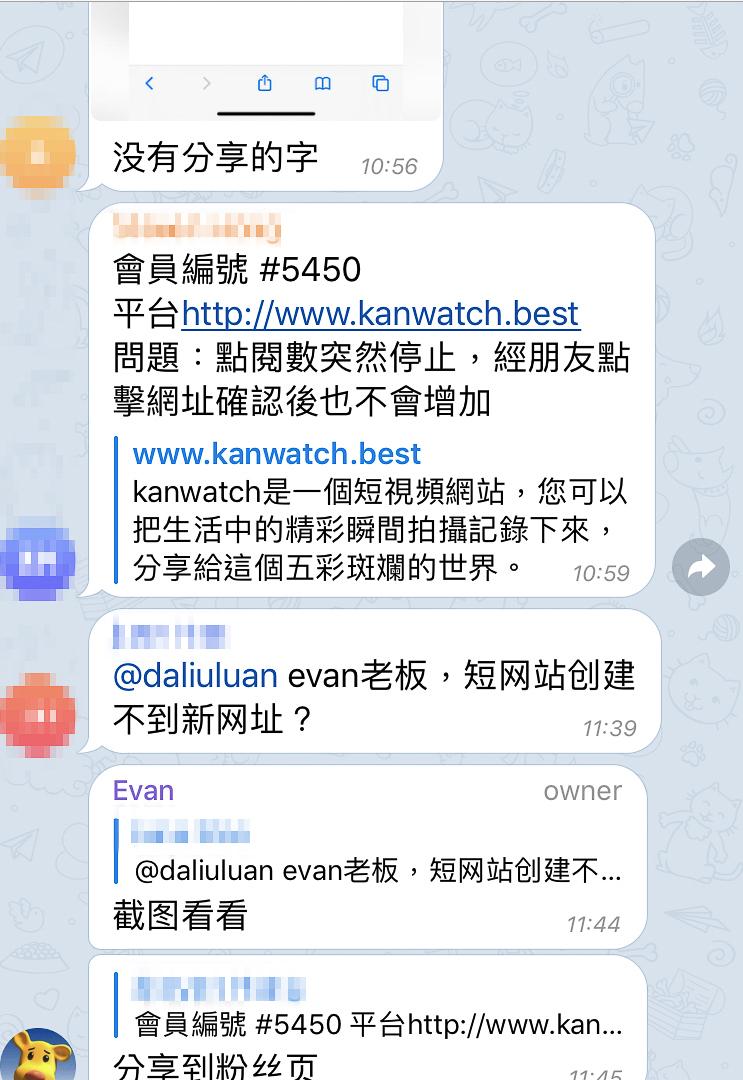

“Are there pros here who know where I can buy Facebook accounts?” “Member #5450 asks: my click rate has suddenly stopped growing, and friends can confirm that when they visit the counter doesn’t increase.” “If you work hard, you’ll be buying a mansion in no time.”

On the encrypted messaging app Telegram, there is lively discussion in a group called “Big Durian” (大榴蓮群). The group has 481 members using a mix of both simplified and traditional characters. Payment can be received via WeChat, Alipay, PayPal and Bank of Singapore, among other methods. The members of the group are all content farm operators, and they run their content on platforms such as Qiqu News, KanWatch, beeper.live, and Flashword Alliance (閃文聯盟).

If you search online for Big Durian or Flashword Alliance, you will find instructional videos and blog posts on how to “make ten thousand per month” and “ways to make money.” The earliest post is from 2015, when users started instructing each other how to make money via content farms. There is a Big Durian instructional video on YouTube, and in the comments you can find many people posting invitation links and referral codes. There are also many recent comments saying that Big Durian has already switched to the new domain Jintian Toutiao. From this we can deduce that these “businessmen” have been in operation for at least four years.

We quietly observed the group and saw questions ranging from the definition of “content farm” to how to buy fake accounts to how to transfer funds. Someone named Evan -- who users called “the boss” -- would answer. His Telegram username was daliulian, and he would post links to the latest incarnations of the platform, the times when profits would be distributed, and tips on how to make more money, such as: “pay day is tomorrow, everyone work hard” or “buy a fan page for 100,000 and then make a post there. Clicks come fast.”

We found Evan's personal Facebook profile from a screenshot explaining how the content farm works. Aside from a few pictures of cartoons and dogs, he only had a QR code to connect with him on WeChat and a photo of a man he claims is a swindler.

Next to the WeChat invitation he wrote a rare message:

“I invite you to participate in Flashword Alliance: http://www.orgs.pub/, members receive Facebook’s money directly, money for clicks, it’s self-explanatory, you’ll get every penny.”

This was written in 2018. He added that clicks from Hong Kong, the US, Australia and Canada could lead to larger amounts of revenue, about $10 USD per thousand clicks. Clicks from Taiwan were valued at $4 USD per thousand, and clicks from Malaysia at $2 USD per thousand. We attempted to contact Evan on various platforms but did not receive a response.

(Update: After the article was published, Evan reached out to The Reporter to discuss his views on content farms, which have been added to the end of the article.)

Through the contact info on a Facebook page associated with beeper.live (女人幫), we found Evan’s e-mail address, and finally got a glimpse of his domain of operations.

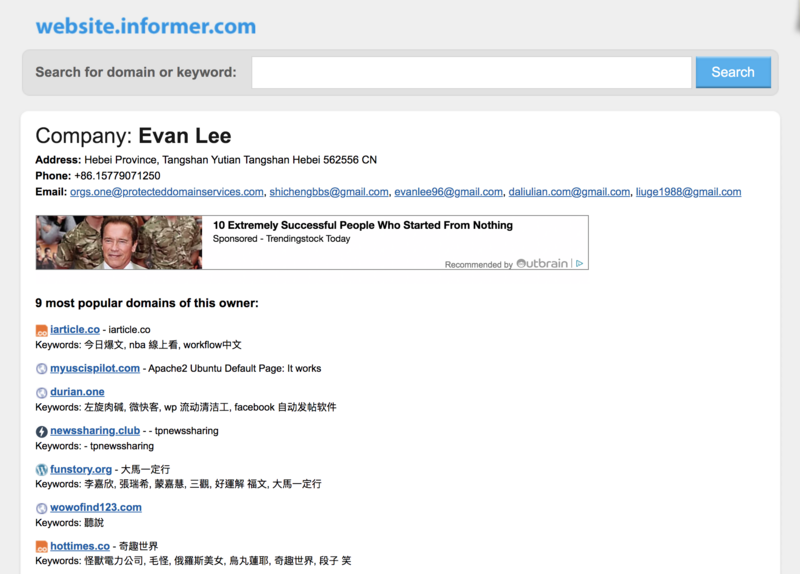

We searched for Boss Evan’s email on Website Informer and DomainBigData and found different addresses under the name Evan Lee, which we then used to trace his steps through markets he had established in various domains. For example, using Evan’s name and the same Google Analytics tracking ID, we saw that he had purchased over 50,000 fan pages on Shicheng (獅城網), the largest free online bulletin in Singapore. He has even created his own multinational news aggregation app and a website for currency conversion.

Using his associated emails, we found that aside from the Google Analytics ID UA-19409266, he also owned the ID UA-59929351. From these two IDs, we found that Evan’s business empire included at least 399 different websites.

All 399 of these websites were content farms producing a variety of different types of content, covering the Singapore, Taiwan, China, Malaysia and Hong Kong markets. Some contained gossip from Hong Kong universities, dramas that Chinese parents liked to watch, or pet memes. There was also content touching on Malaysian, Taiwanese, Hong Kong and Chinese politics. Content on China was largely positive, and adopted a “greater China” ideology. There were also websites about weather and employment in Singapore, exchange rate calculators, and erotic websites.

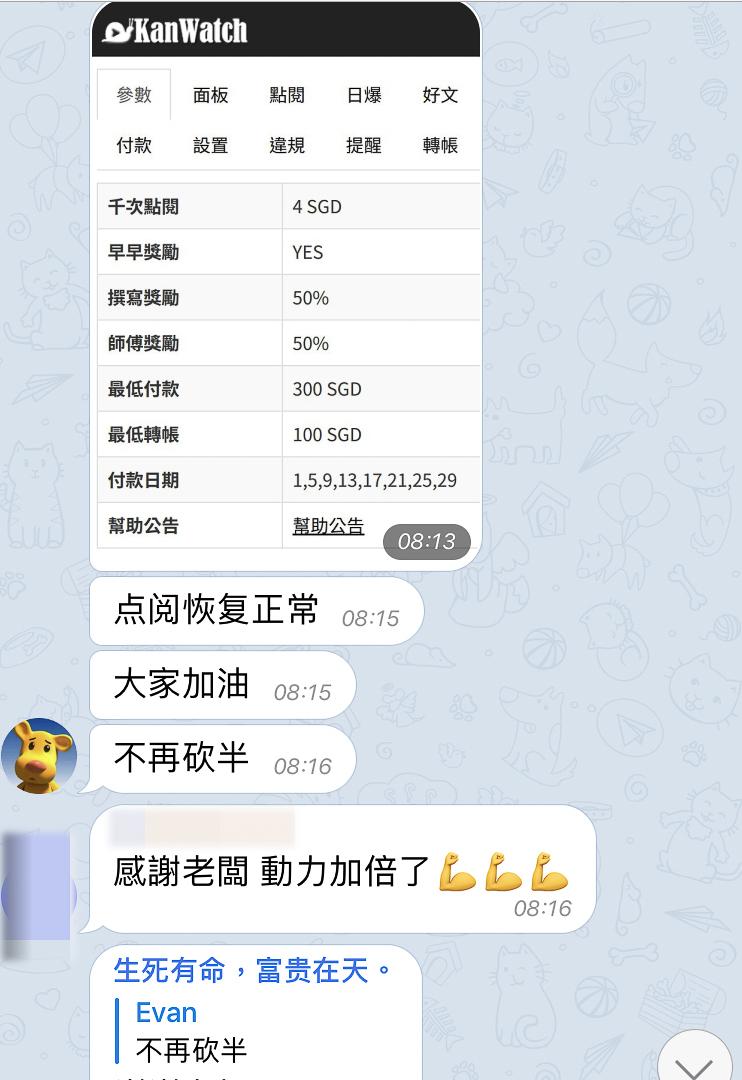

Evan, who has much experience in running content farms, often offers comfort to members in the Telegram group who are anxious about not making money, giving them advice on how to increase traffic flow. He uses his payment messages as encouragement, posting screenshots to members to show that good articles can get more than 300,000 views, earning about 1,200 Singapore dollars or $900 USD (assuming a payment of 4 Singapore dollars per thousand clicks).
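The arithmetic behind that screenshot is simple enough to check. The sketch below assumes a flat per-thousand payout with no profit split between author and sharer. The function name `payout` is ours, not the platform's. The regional figures are the 2018 rates Evan quoted for Flashword Alliance, applied here to the same 300,000 clicks purely for comparison.

```python
def payout(clicks: int, rate_per_thousand: float) -> float:
    """Revenue for a number of clicks at a flat rate per thousand clicks."""
    return clicks / 1000 * rate_per_thousand


# The screenshot case: 300,000 views at 4 Singapore dollars per thousand.
article_sgd = payout(300_000, 4)

# Per-thousand USD rates Evan quoted in 2018, by where the clicks come from.
usd_rates = {
    "Hong Kong / US / Australia / Canada": 10,
    "Taiwan": 4,
    "Malaysia": 2,
}

# Hypothetical comparison: the same 300,000 clicks from each region.
usd_by_region = {
    region: payout(300_000, rate) for region, rate in usd_rates.items()
}
```

Under these assumptions `article_sgd` comes to 1,200 Singapore dollars, matching the figure above, and the same traffic would be worth five times as much from Hong Kong as from Malaysia.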

On Christmas Day at noon, Boss Evan dressed up as Santa Claus and notified the group that he had issued payments. Aside from thanking the boss, the members also asked if their Facebook click-through rate had recovered. “Not yet,” Evan replied.

In an interview with The Reporter, Chen I-Ju (陳奕儒), Facebook's public policy manager in Taiwan, said Facebook will “algorithmically suppress” websites with “poor user experience,” resulting in lower click-through rates.

But the group came up with solutions to get around this. Take user @ink99 as an example: recently, a Facebook fan page of theirs with 60,000 followers reached a new record of 140,000 hits. User @ink99 suggested that posts should be written with positive language in order to avoid being reported. Another user, Yu2, echoed this advice, attributing his page’s 40,000 hits to Facebook recommending his links, while others shared strategies such as rotating their accounts and avoiding spam.

Chen said that Facebook monitors activities on the platform and continues to update its community guidelines to reduce the number of fake accounts. But from our observations on the Big Durian group chat, it doesn’t matter whether it’s Facebook or Google AdSense; these users can come up with ways to work around new guidelines.

Evan, who has many years of experience, has changed the rules on his platform so that political articles are no longer allowed, possibly to avoid sensitive topics arising from recent events between China, Hong Kong and Taiwan, but also because such messages have become focal points for detecting disinformation on many platforms. Even so, Evan cannot distance himself from politics. There is a member of Big Durian called “Ghost Island” (鬼島), and his profile picture is the well-known Taiwanese website Ghost Island News (鬼島狂新聞).

Journalists at The Reporter examined the Google Analytics ID behind Ghost Island News, and realized that it was the same one used by Yee Kok Wai’s sites and the Qiqi content farms: UA-19409266(-3). Ghost Island News uses two additional domains, taiwan-madnews.com and ghostislandmadnews.com, both registered under the same email address. We found the owner of said email address, an individual named A-hua (阿華, pseudonym). Over the phone, A-hua told us that he lives in Kaohsiung.

“That’s right, that was me in Big Durian.” A-hua tells us that Big Durian moderator, Boss Evan, is a Chinese citizen living in Malaysia, and that he runs the platform to help people make advertising money from Google and Facebook. Today, the platform forbids political content, very likely due to an article that A-hua had written a month or two ago.

“Thirty to forty thousand people saw the article within four hours, and that caught Facebook’s attention,” recalls A-hua. Because of this, he nearly couldn’t collect the 400 Singapore dollars he earned from the article. “Evan blamed it all on me.”

Thinking back to his time on the Big Durian platform, A-hua says in his heyday he could make more than $2,000 USD a month. He said the key was to copy articles from mainstream sources and then insert his own opinions. The most important thing was “knowing who your fans are.” For example, on Ghost Island News “you would praise Han Kuo-yu (韓國瑜), say that he had a hundred thousand supporters, then curse Tsai Ing-wen (蔡英文), and the article would do well.” He voted for Han last year, though he says he doesn’t have a party or an ideology, and that writing political articles is just a hobby. When talking about others in his line of work, he admits, “sometimes you have to, you know, make things up.”

For this upcoming election, A-hua said that the ruling Democratic Progressive Party (DPP), the opposition Chinese Nationalist Party (KMT) and Taipei mayor Ko Wen-je’s (柯文哲) Taiwan People’s Party (TPP) have all enlisted their own internet armies. He himself received messages from strange Facebook accounts, asking him to work for both the pan-blue and pan-green camps. The accounts all appeared to be foreigners, but when they realized that A-hua wasn’t interested the accounts would vanish. These methods of contacting him are meant to leave no trace, since according to A-hua, “politicians are very afraid of being associated with these internet armies right now!” Because he had no information on who was behind the messages we could not verify his claims.

He did say, however, that the Japanese news company NHK had come to Kaohsiung to interview him, asking him to give analysis on Taiwan’s political internet battles. Interest in recruiting A-hua stems from his rich experience in the field. He was a participant in the White Justice Union (白色正義聯盟) Facebook page opposing the 2014 Sunflower Student Movement. In 2016 he joined the Association for the Promotion of the Proper Direction for a Lawful Society in the Republic of China (社團法人中華民國端正選風促進會) and helped to organize this pan-blue internet army. He also helped to create Blue-White Sandals Counterattack, a Facebook page which has over 30,000 followers and supports the KMT. His original vision for the group was to strike a moderate pan-blue perspective, but as the members became more and more radical he decided to quit the group.

With years of experience, he says business is getting harder. Because he had created fake news in the past, he has been summoned to court by involved parties and fined more than 30,000 NTD. In this election year, he feels pressure from all directions owing to national security officials and Han Kuo-yu supporters. Even Boss Evan has put pressure on him: “Evan is Chinese, so if you criticize China he will delete your article.”

In his view, if you want to make money manipulating public opinion, then you have to make a lot of noise via images and videos on community platforms to attract a large number of fans. “If you curse someone harshly enough, then someone with money will come find you... the green camp has more money.” This election, A-hua plans to vote for Han Kuo-yu. After political articles were banned on Big Durian, he switched to ViVi (ViVi視頻), a video content platform from China, to continue to air his political views and conduct his business.

How much can self-employed individuals such as A-hua influence Taiwanese public opinion?

The content farm with the greatest influence in the pan-blue camp is Mission (密訊). After Facebook blocked Mission, fan pages that usually shared their videos switched to sharing videos from Big Durian. We collected two months of statistics and found that 10 pan-blue Facebook pages, with a total of 460,000 supporters, posted 8,886 times, mostly from the Mission and Ghost Island News fan pages, of which 3,298 articles originated from Big Durian. These numbers provide us with a record of this content farm empire’s footprint in Taiwanese fan pages.

A-hua is optimistic about his prospects. He has 500,000 NTD in “start-up funds” to create his own platform for video content, modeled after Big Durian. He stressed that by working with coders from China, he could continue to dominate Taiwan's political information market at a quarter of Taiwan's cost.

From a single Google Analytics ID and a group with 481 members, Boss Evan created a platform for panning for gold in the internet’s information streams, influencing Taiwanese, Malaysian, Singaporean, Hong Kong and Chinese public opinion in the process. At the same time, there are characters like A-hua who started in content farms and ended up creating their own fan pages and websites.

The shift from text to videos and from people working independently to platforms such as Big Durian, as well as interest in contract work from political parties, will guarantee that content farms with agendas will continue to find our attention.

Amidst the chaos, many people don’t understand why this business even has a market. They wonder how much influence content farms really have in Taiwan.

Cheng Yu-chung (鄭宇君), assistant professor at National Chengchi University College of Communications, uses trends from the past ten years to explain the rise of content farms. First, the line between news and advertising has blurred. In the past, it was easy to distinguish between news reports and advertisements on the page of a newspaper. But as the media engaged in the practice of advertorials, that line gradually faded, and people's ability to tell the two apart has decreased.

Second, when people use social media to receive their news, whether or not they decide to read something usually depends on who shared it. That is, they pay less attention to what media company or which author wrote it, which is a boon to content farms and their anonymous authors. As a long-time observer of online debates and political influence operations in Taiwan, Cheng stresses that an accountability mechanism must be established for media and key opinion leaders, otherwise they cannot be held accountable. In an environment where one cannot distinguish between news and information, “it becomes a loophole that Chinese party-state media can exploit.”

The final reason is the way internet platform algorithms work. “Google’s algorithms put a high value on links and click rates. Content farms create articles with SEO (search engine optimization) in mind, but news is created with the intention of reporting facts... When people use these methods to attack Google, they have no way to tell what is real and what is fake.”

On this point, internet platforms insist that they are constantly looking for solutions, including tweaking their algorithms and suspending violators. Taiwan's top three platforms for disinformation -- Google, Facebook and LINE -- have all introduced policies for greater transparency and educating users. At the same time, in order to protect freedom of speech, the task of identifying real and false information has been mostly left to volunteers from a handful of third parties and nonprofits.

These third-party fact-checkers have few resources and little manpower. In the battle against disinformation, this frontline is the most thinly stretched and fatigued.

A worker at one of these third-party organizations which partners with one of the big three social media platforms has told us that it can take up to two months to verify a particular piece of information in the worst case scenario, and that it is even harder if the information contains images or video. The list of content that Facebook sends to them is ranked by priority, but they are not told how this priority is determined nor where the content comes from. As for LINE, since October the fact-checkers have suspected that the agents of disinformation have found a way to stuff the fact-checking lists with actual news, making work even more difficult for them.

One nonprofit told us that cutting off Google AdSense money flows is the key to controlling disinformation, but their attempts to use Google’s reporting mechanism have so far received no response. They warn that even though YouTube has gotten more proactive in fighting disinformation in Taiwan lately, they cannot keep up with the volume and methods of disinformation.

Anita Chen (陳幼臻), a senior associate with Google Taiwan's government affairs and public policy team, said that YouTube uses an automated algorithm to delete harmful content based on the platform's community standards. At the same time, they are developing a mechanism for fighting disinformation, including disclosing government-funded media and displaying disinformation warnings next to search results.

But a third-party fact-checker working with Google has said, “their verification mechanism will only affect searches made from their main page, but many Taiwanese view videos directly from LINE.” He has observed that it generally takes tech companies a very long time to establish global policies for detecting malicious users, while the disinformation business can react very quickly. For example, once a video is taken down, a slightly altered video will appear under a new username. Or, if a website is taken down, as long as the content still exists on a server, its operators can register a new domain at a new address and be back in business.

As the main pipeline by which disinformation is spread in Taiwan, LINE still relies on the work of third parties to serve as their government policy department, and otherwise relies on education as the primary countermeasure for dealing with disinformation.

Behind this endless war is a gap between how information is consumed and how the media and internet platform industries are fundamentally changing. In this newborn “marketplace,” so long as trends don’t reverse, human nature means that this kind of business will forever exist. Will their profits cause a deficit in democracy?

On the frontlines, Fakenews Cleaner has pointed out that disinformation on LINE penetrates communities, temples, political parties, and civic groups, resulting in a polarized society and making rational discussion impossible.

For Roger Do (杜元甫), founder of AutoPolitic, a consultancy agency for online electoral strategy in Asia, these methods are an effective way for political camps to consolidate their existing supporters. “As long as you can attract user attention, Google will pay you, and it is natural to use content farms.” He stresses that the dissemination methods that content farms use can also be used by totalitarian countries. If such countries leverage their national and financial resources to create or influence content on a large scale, they would have the ability to change the numbers on public opinion on a variety of issues.

More importantly, as development in artificial intelligence (AI) continues, it will become possible for content farms to automate content generation. He cites a Spanish company as an example: it was able to use AI to search for the best articles and automatically create a corresponding video. The company was recently founded but makes an annual profit of over $600,000 USD, a new winner in the business of information warfare.

The information warfare business moves and reacts quickly, constantly developing new weapons to grab our attention. At the same time, the tech giants that dominate their respective markets, and whose profits are built on advertising, need to hold their customers' attention.

As these tech giants continue to use the same business model to chase profits, these information warfare profiteers continue to compete for our attention, profiting from communities of all stripes. If this gap isn't fixed, then these two parasites will continue to coexist, profiting together inside an ecosystem that is never repaired.

The myth of the health benefits of yam leaf milk still circulates on LINE in Taiwan today. In India, similar disinformation has led to the sale of large quantities of fake drugs, leading doctors to volunteer to fight the disinformation. In the United States, false news about vaccines has already affected children’s health. Perhaps only when disinformation becomes a pressing issue in the public eye will these information warfare profiteers admit that their business opportunity is also a public crisis.

On December 27th, after the publication of this article, Boss Evan finally accepted our requests for an interview to discuss his views on content farms. He was only willing to conduct an interview via text, and not orally. When we asked if he was a Chinese citizen, he said it was a secret. We chatted for three hours, and he stressed that his work should be separated into two categories.

There is the Flashword Alliance, which is a self-publishing platform open to anyone; Boss Evan says the platform is committed to freedom of expression, and he takes no responsibility for any content. The other websites, KanWatch, beeper.live, and Qiqu News, are content platforms. In his view, the biggest content farm in the world is YouTube.

Below is a first-person summary of our interview with Boss Evan:

When A-hua published a political article which hit number two in number of views after only one day, I thought the risk was too high so I banned it. At the time I knew of two people who made political content, one was Holger Chen (陳之漢) and the other was A-hua. That day I blocked Holger Chen and Ghost Island News, and deleted anything about Tsai Ing-wen, Han Kuo-yu, or Taiwan’s elections.

There are a lot of opposing political ideas on my platforms and I don’t advocate for any of them. Whether or not people who use my platform have been paid by the DPP or KMT, I have no idea. There’s too much risk in political content, so I later deleted all content on cross-strait issues or Hong Kong on my content farms. My good friend from Taiwan told me before not to touch politics. I didn’t pay too much attention because there wasn’t much political content, but now I’ve completely banned politics, and violators get deducted double revenue. But I haven’t banned politics on the self-publishing platform. Everyone has free speech there, and if the DPP writes something I won’t ban it. There are DPP supporters there, but not as many. I am not trying to influence public opinion, and I haven’t taken money from anyone to write articles.

Who Else Is Doing Content Farms?

There are two kinds of farms. One kind makes money by sharing trivial articles. The other kind has an agenda, what kind of agenda I don’t know, but there’s some kind of motivation behind their actions. Right now, I know about six major content farms run by Chinese; but the article you wrote only mentions the articles I wrote, and makes it seem like I run every content farm on earth.

Fan pages in Taiwan, aside from mainstream media, are all content farms. I know three major Taiwanese content farm companies, with a total of over 100 million followers. Of course, some of their followers are repeats. These companies work together with LINE and Facebook. I don’t want to say who they are, I’m afraid of retaliation. Taiwanese content farms are all companies, with ten or so sharing an office. You can’t get inside, so we don’t know much about them. I just write code for this platform, I don’t write content. Those big companies have a lot of employees, and they do everything themselves. These content generators are the ones who are truly scary, because whatever they write, their boss says, okay. These companies are all Taiwanese, and they have bots that spam people. Spam bots aren’t my domain... I don’t really use LINE.

How Do You Suggest We View Information That Comes From Content Farms?

Farms are just farms, don’t think about it too much. Most content farms are just trying to profit, and have no interest in politics. The political content that appears in content farms is only there because it just so happens that a member has that political view, and doesn’t represent the view of the managers. Political thought is often very extreme and can’t view things objectively. People just take some content that aligns with their views and write about it, and they tend to miss the forest for the trees. For example, people have strengths and weaknesses; those who like them will write about their strengths, and those who hate them will write about their weaknesses. It seems like both sides are right, so what’s the problem? But if people write too much, they become biased. I hope you can write objectively and fairly. That essay about yam leaf milk has 830,000 views on YouTube, which is more than the number of clicks I get on my website on any given day. The article only talks about me, but please also say something about the number one content farm, which is YouTube.

To read the Chinese version, please click: 〈LINE群組的假訊息從哪來?跨國調查,追出內容農場「直銷」產業鏈〉.
